It happens sometimes, a problem is in your mind for a while and one day the solution suddenly comes.

I know that what I'm going to explain may be obvious to many, probably it is, but because is also quite simple and nicely low-level I like to share this post.

The problem: let suppose that I wanted to be able to compare two numbers without knowing if they are floating points or integers. This basically means that I want to find a procedure that is good for both types; we can also call it "type agnostic".

Why should I desire such a thing?

There could be more than one reason:

- Depending on the architecture int comparison can be faster.

- You don't want to put an additional if on the type, thus breaking the pipeline to choose either of procedures.

- You want to keep your OpenCL or CUDA kernels simpler and good performing as possible and so on...

For us convenience we are going to focus on 32bit comparisons but all we are going to say can be applied to 16, 64 and 128 bit.

As usual we need a little and incomplete overview... let's start seeing how int and float numbers are encoded. Of course experts can jump this part.

Signed integers in modern computers are encoded using the two's complement representation.

We all know how to count using base 2: 0, 1, 10, 11, 100, 101... etc etc

The two complement uses the first bit to store the sign: 0 means + and 1means -. So if we are talking about 32 bit unsigned integer (uint32_t) the natural number sequence will be:

0 -> 00000000000000000000000000000000

1 -> 00000000000000000000000000000001

2 -> 00000000000000000000000000000010

3 -> 00000000000000000000000000000011

3 -> 00000000000000000000000000000100 and so on...

Talking about negative numbers we already introduced the problem of dealing with the sign bit (the first one); the surprising fact is that all the other bits are reversed compared to what we would expect.

-1 -> 11111111111111111111111111111111

-2 -> 11111111111111111111111111111110

-3 -> 11111111111111111111111111111101

-4 -> 11111111111111111111111111111100

-5 -> 11111111111111111111111111111011 etc...

I think that is quite intuitive, but if you want to be a little more formal to convert from binary to two's complement notation please accept this pseudo code:

if (first_bit == 0)

decode(bits_sequence)

else

-1 * (decode(reverse(bits_sequence)) + 1)

My advice if you are new to that is to play a little bit.

You can do it taking advantage of the fact that in gdb you can change variable values and print directly the bit representation.

Coming to the floating point encoding (defined in the IEEE 754) the situation is slightly more complex (but not that much).

For32 bit systems we have:

Again we find the first bit as sign bit, and again 0 means + and 1 means -.

After having coded 8 bits as unsigned number and shifted subtracting -127 (we will understand after why), the remaining 23 bits are used for the mantissa (or fractional part).

The mantissa encoding is made like this: every bit is assigned a value that becomes smaller moving to the right. In details the first one (position 22) has value 1/2, the second (position 21) 1/4 and the third 1/8. The idea behind is pretty clear; if we take a random bit in the mantissa the sum of the values of all the right ones is always smaller! In the end when we have calculated the mantissa value we have to add 1 to them. The result is that the mantissa has a value that always ranges beteween 1 and 2.

You can aggregate sign exponent and mantissa using this formula:

Where: s represent the bit sign, m the mantissa value and e the exponent value.

Last but not least we have 2 strong exceptions to this rule:

- If all the exponent bits are 1 and all the mantissa are 0 the output is +Infinity or -Infinity (depending on the bit sign).

- If all the exponent bits are 1 and the mantissa is non zero the output is Nan.

Now that we know "everything" about number encoding at first sight we are not much further ahead than before. To find the solution for our problem we must find some kind of similarity in the comparison process between signed int and float.

Let's analyse how we would decide if signed int1 > signed int2... the procedure would be:

- Let's compare the bits sign, if they are different we already have a winner! unless...

- If both numbers are positive move from left to the right searching for the first different bit between the two numbers. The one's with the first 1 more is the greater.

- If both numbers are negative... do the same!! Because bits in two's complement notation for negative numbers are "inverted" but also the sign of the number is negative the two effects cancel out!

-1 * -1 = 1

Not only now we know one reason why the two's complement notation has been chosen, but also we have learned a really important lesson form the point 2 of the last procedure. Bits on the left are always more important compared to the the right ones, so we need only to find the first different bit to decide which number is the greater.

Now let take a look to a couple of important similarities from which we can take advantage in the floating point encoding:

- The first bit represents the sign.

- Also the bits significance decrease from left to right.

What we have to do is simply taking the floating point numbers that I will have to compare and encode them in this new way. After that for greater/smaller comparison operations I'll be able to forget completely that they actually are floating point numbers.

We will check if the float is negative and in that case we will have to:

- Invert all bits values using the not bitwise operator "~".

- Set again the first bit to 1 using the OR bitwise operator "|". We will use as one of the either two operands a bit sequence with the first bit 1 and the other zero. In hexadecimal notation this 32bit sequence is 0x80000000.

Finally here is the function:

Of course even if less readable we can write it as a macro.

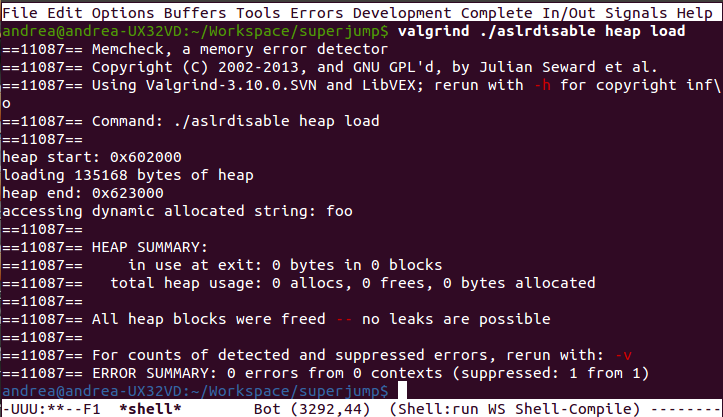

And here is the test program with the macro version in it.

As you can see I'm extracting a random bit sequence to use as source. I'm also paying attention to verify that every bit sequence that I'm going to use is a valid floating point bit sequence, using the != operator.

Conclusions: Also if I know I'm not the first using some ideas similar to this one, I think it was a quite funny use case to introduce you to number encoding and to a couple of bitwise operators.

But most important I think we've learned that sometimes to solve a problem we can change the encode method instead the algorithm that processes the data created with that.

The small code examples can be also cloned from here.

NOTE: A colleague of mine has correctly pointed out that I didn't treated denormalized numbers:

A denormalize number is a floating point number in wich all the exponent bits are zeroed. In that case to decode the number simply we don't add one to the mantissa value. Denormalized number has been made to encode such a small numbers that the minimum exponent (-126) is not sufficient for the encoding. In that way is possible to encode smaller number paying the price in precision.

Again here is the standard for more details.

Because the smaller normalized number is bigger than the bigger denormalized one left bits are always more important then the right one, for this reason this method for the comparison works also in this case (as proved by the source test provided).

The small code examples can be also cloned from here.

NOTE: A colleague of mine has correctly pointed out that I didn't treated denormalized numbers:

A denormalize number is a floating point number in wich all the exponent bits are zeroed. In that case to decode the number simply we don't add one to the mantissa value. Denormalized number has been made to encode such a small numbers that the minimum exponent (-126) is not sufficient for the encoding. In that way is possible to encode smaller number paying the price in precision.

Again here is the standard for more details.

Because the smaller normalized number is bigger than the bigger denormalized one left bits are always more important then the right one, for this reason this method for the comparison works also in this case (as proved by the source test provided).